AI is already making consequential decisions—about benefits, healthcare, policing, public services. Policy leaders are accountable for these decisions whether they understand the systems making them or not.

The Bottom Line

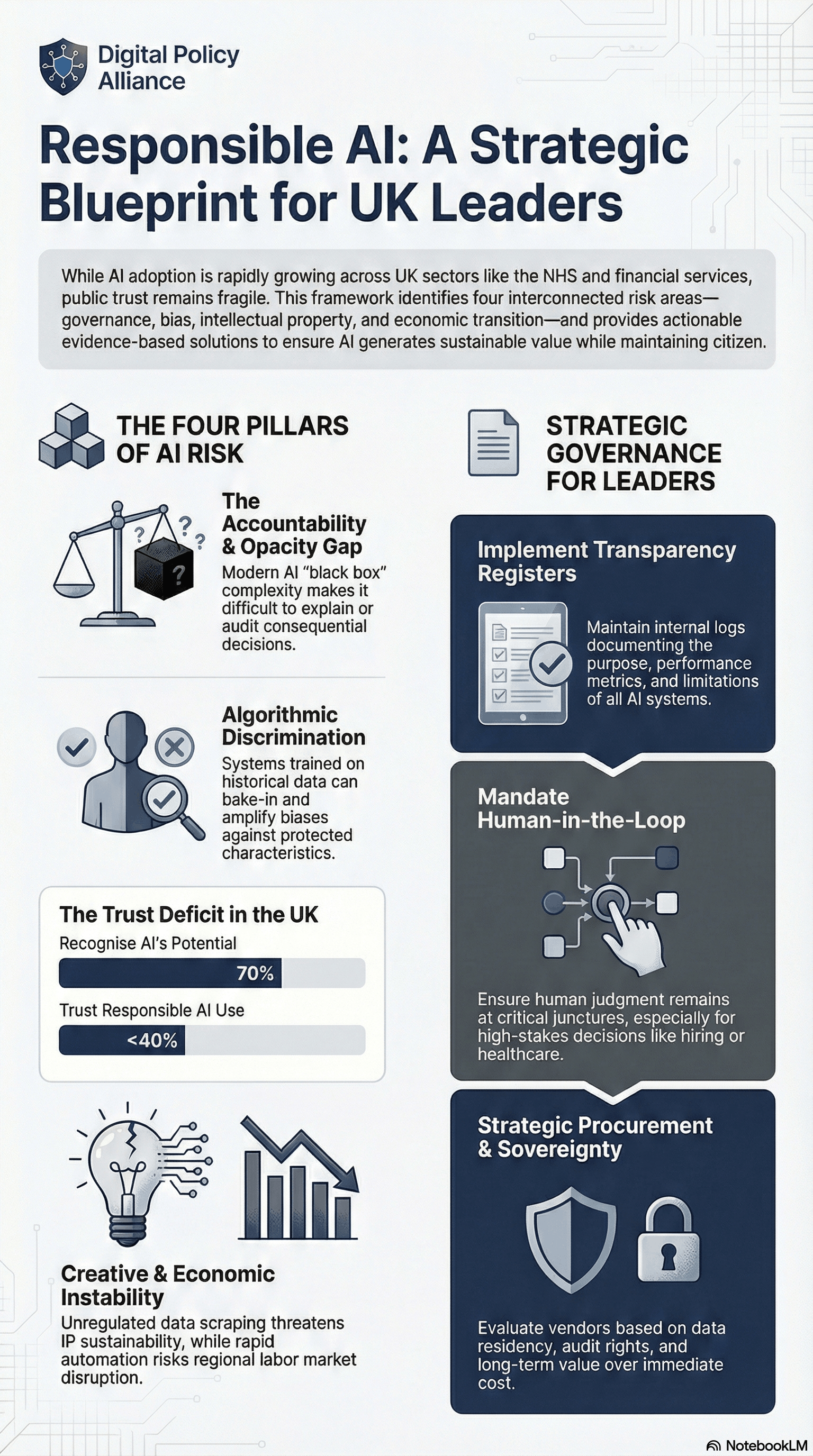

Responsible AI isn't about slowing innovation. It's about ensuring that when things go wrong—and they will—you can explain what happened, why, and what you're doing about it. Public trust is fragile: fewer than 40% of UK adults trust organisations to use AI responsibly.

Why AI Fails Differently

Traditional software follows explicit rules. When it fails, you can trace the logic and fix it.

AI systems learn patterns from data. They don't follow rules—they develop statistical associations. This creates fundamentally different problems:

Confidently wrong

AI can produce plausible-sounding outputs that are completely incorrect, with no indication anything is wrong.

Inherited bias

If historical data reflects discrimination, AI perpetuates it—not through explicit programming, but through learned patterns.

Opaque at scale

Large modern AI models can have billions of parameters, and even their developers can't always explain why a specific decision was made.

Context-dependent

Systems trained on one population may fail badly on another. Performance varies in ways that aren't obvious.

The Four Dimensions of Responsible AI

1. Ethics & Accountability

The problem: AI systems making consequential decisions often lack transparency, human oversight, or clear accountability.

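Real examples: Post Office Horizon was not AI, but its faulty accounting software was treated as reliable without adequate scrutiny. That led to more than 700 prosecutions of sub-postmasters, many of them since overturned. DWP fitness-for-work assessments have been criticised for insufficient human review.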

Questions You Should Be Asking

- "Can we explain the logic behind significant AI-driven decisions?"

- "When an AI-driven decision goes against someone's interests, what recourse do they have?"

- "If something goes wrong, who is accountable? Is this documented?"

2. Bias & Fairness

The problem: AI can discriminate based on protected characteristics—not intentionally, but through biased training data or flawed metrics.

Real examples: Mortgage algorithms have been found to disadvantage ethnic minority applicants. Automated benefits systems have been criticised for disproportionately denying support to disabled claimants. These outcomes weren't intended; they emerged from patterns in historical data.

Questions You Should Be Asking

- "What testing occurs for bias across different demographic groups?"

- "Are we tracking performance by population, or only overall accuracy?"

- "Who has authority to stop deployment if bias is found?"

3. IP & Creative Sustainability

The problem: AI systems are trained on vast datasets often scraped without permission. Creators find their work used to train systems that may displace them.

Why it matters: UK creative industries are worth £126 billion. If creators can't capture value from their work, the ecosystem that produces training data degrades. Major litigation is ongoing.

Questions You Should Be Asking

- "Do we know where the training data for our AI systems comes from?"

- "What's our position on using systems with unclear data provenance?"

- "How dependent is our AI strategy on data sources facing legal challenge?"

4. Economic Transition

The problem: AI will displace some jobs while creating others. Managing this transition responsibly requires anticipation and investment in people.

The scale: Some research estimates that around 20% of UK jobs face high automation risk in the next decade, concentrated in administrative, routine and manufacturing roles. Risk is highest in regions with weaker economies.

Questions You Should Be Asking

- "What roles in our organisation are most exposed to AI automation?"

- "What's our strategy for the people in those roles?"

- "Are our AI procurement decisions building capability or dependence?"

Reflection

- Accountability: If an AI system caused significant harm tomorrow, could you explain what happened and why?

- Fairness: Do you know whether your AI systems perform differently for different groups?

- Sustainability: Are your AI practices building a healthy ecosystem or extracting value unsustainably?

- Transition: What happens to the people whose work is automated?

Key Takeaway

Responsible AI isn't a compliance checkbox—it's about maintaining the licence to operate. Your role isn't to prevent AI adoption. It's to ensure that when problems occur, you can demonstrate you acted responsibly—risks assessed, safeguards in place, accountability clear.


Visual Summary