Regulations that didn't exist two years ago now carry the force of law. Capabilities that seemed futuristic last quarter are now commodity features. Building AI that works today isn't enough. You need systems that can adapt.

The Bottom Line

The regulatory landscape will keep evolving. Technology will keep advancing. Public expectations will keep shifting. Organisations that build rigid systems optimised only for today will find themselves perpetually behind, spending resources on catching up rather than on creating value.

Why AI Systems Resist Change

Traditional software can be updated by modifying code—change the rules, deploy the new version, done.

AI systems are different:

- Behaviour emerges from training data, not explicit rules

- Changing behaviour often requires retraining—expensive, time-consuming, unpredictable

- Systems develop complex dependencies that make changes risky

- The better a system performs today, the greater the reluctance to modify it
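The contrast above can be made concrete with a toy sketch. Everything in it is hypothetical (the approval scenario, the names, the numbers), and the "training" is a deliberately simplistic stand-in for real model fitting. It only aims to show that a rule is changed by editing code, while learned behaviour is changed by altering data and refitting, sometimes with side effects nobody asked for.

```python
# Illustrative only: the scenario, names, and numbers are all hypothetical.

APPROVAL_LIMIT = 1000  # explicit rule: edit this constant and redeploy


def rule_based_approve(amount: float) -> bool:
    """Traditional software: the behaviour is written directly in code."""
    return amount <= APPROVAL_LIMIT


def fit_threshold(examples: list[tuple[float, bool]]) -> float:
    """Toy 'training': pick the cut-off that agrees with the most examples."""
    candidates = sorted(amount for amount, _ in examples)

    def agreement(cutoff: float) -> int:
        return sum((amount <= cutoff) == approved for amount, approved in examples)

    return max(candidates, key=agreement)


def learned_approve(amount: float, threshold: float) -> bool:
    """Learned system: the behaviour is whatever the data implied."""
    return amount <= threshold


history = [(200, True), (800, True), (1200, False), (3000, False)]
threshold = fit_threshold(history)

# To change behaviour we cannot edit a rule; we add examples and refit.
new_history = history + [(1500, True), (1800, True)]
new_threshold = fit_threshold(new_history)

print(threshold, new_threshold)
# Side effect: 1200 was labelled "rejected" in the data, yet is now approved.
print(learned_approve(1200, new_threshold))
```

In this sketch the refit raises the cut-off as intended, but it also flips the decision for an amount that the historical data had marked as rejected. That is a small-scale version of why retraining is described above as unpredictable.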

The Four Dimensions of Responsive AI

1. Regulatory Agility

The challenge: The EU AI Act moved from a 2021 proposal to binding law in 2024, with its obligations phasing in over the following years. The UK is developing sector-specific regulation. Organisations must navigate this shifting terrain while maintaining operations.

What good looks like

Regulatory horizon scanning. Compliance-by-design architecture. Active engagement in consultations. Modular compliance frameworks.

Warning signs

No early warning system. Compliance is reactive. No relationships with regulators. Systems built for current rules only.

Questions You Should Be Asking

- "How much lead time do we have on regulatory changes? Weeks? Months?"

- "If significant new requirements emerged next quarter, how difficult would compliance be?"

- "What relationships exist with relevant regulators?"

2. Technology Evolution

The challenge: AI evolves faster than any previous enterprise technology. Foundation models become outdated within months. Move too slowly and fall behind. Move too quickly and face instability.

What good looks like

Technology radar processes. Model-agnostic architecture. Explicit technical debt management. Continuous skills development.

Warning signs

No systematic evaluation of emerging technologies. Locked into specific models. Technical debt invisible. Skills stagnating.

Questions You Should Be Asking

- "What systematic process exists for evaluating emerging AI technologies?"

- "How easily could our AI systems incorporate a significantly better model?"

- "What visibility do we have into AI technical debt?"
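One way to picture the model-agnostic architecture mentioned above is a thin interface that application code depends on, with each model hidden behind its own adapter. The sketch below is a minimal illustration under stated assumptions: `TextModel`, `VendorAModel`, `VendorBModel`, `REGISTRY`, and `summarise` are hypothetical names, and the adapters return canned strings rather than calling any real provider.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: the only thing application code depends on."""

    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    """Stand-in adapter for one provider (no real API is called)."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBModel:
    """Stand-in adapter for a second, newer provider."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


# Adding a model means writing one adapter and registering it here.
REGISTRY: dict[str, TextModel] = {
    "vendor-a": VendorAModel(),
    "vendor-b": VendorBModel(),
}


def summarise(document: str, model: TextModel) -> str:
    """Application logic knows the interface, not the vendor."""
    return model.complete(f"Summarise: {document}")


ACTIVE_MODEL = "vendor-a"  # switching models is a configuration change
print(summarise("Q3 report", REGISTRY[ACTIVE_MODEL]))
```

With this shape, the answer to "how easily could we incorporate a better model?" depends mainly on how much code bypasses the interface. A real migration would still need evaluation and testing, which this sketch does not cover.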

3. Societal Expectations

The challenge: Public attitudes toward AI are evolving rapidly. Trust is conditional on demonstrated responsibility. Expectations for transparency and oversight have increased markedly. High-profile failures damage entire sectors.

What good looks like

Ongoing stakeholder engagement—genuine dialogue, not PR. Proactive transparency. Participatory design involving affected communities.

Warning signs

No mechanism to track expectations. Transparency only when required. Stakeholders consulted after decisions are made.

Questions You Should Be Asking

- "What mechanisms exist to understand evolving stakeholder expectations?"

- "How transparent is our organisation about its AI use?"

- "When did we last change AI practices based on stakeholder feedback?"

4. Organisational Learning

The challenge: Responsive AI requires organisations that can learn, adapt, and evolve continuously. This is fundamentally a cultural and capability challenge. Organisations designed for stability struggle with continuous change.

What good looks like

Cross-functional AI governance. Systematic learning from incidents. Culture supporting experimentation. AI literacy distributed throughout.

Warning signs

Siloed AI decisions. Incidents not analysed. Risk-averse culture. AI expertise concentrated in one team.

Questions You Should Be Asking

- "How effective are governance structures at cross-functional integration?"

- "What processes exist for learning from AI incidents and near-misses?"

- "Does our culture support the experimentation that adaptive AI requires?"

Reflection

- Regulatory preparedness: If major new requirements were announced, would you be responding or scrambling?

- Technology currency: How current are your AI capabilities? What's your process for staying current?

- Societal alignment: Are you anticipating public expectations or reacting to them?

- Learning capability: When something goes wrong with AI, how effectively does your organisation learn from it?

Key Takeaway

Responsive AI is not optional—it's a strategic imperative. Your role isn't to predict the future. It's to build an organisation that can adapt to whatever future emerges.


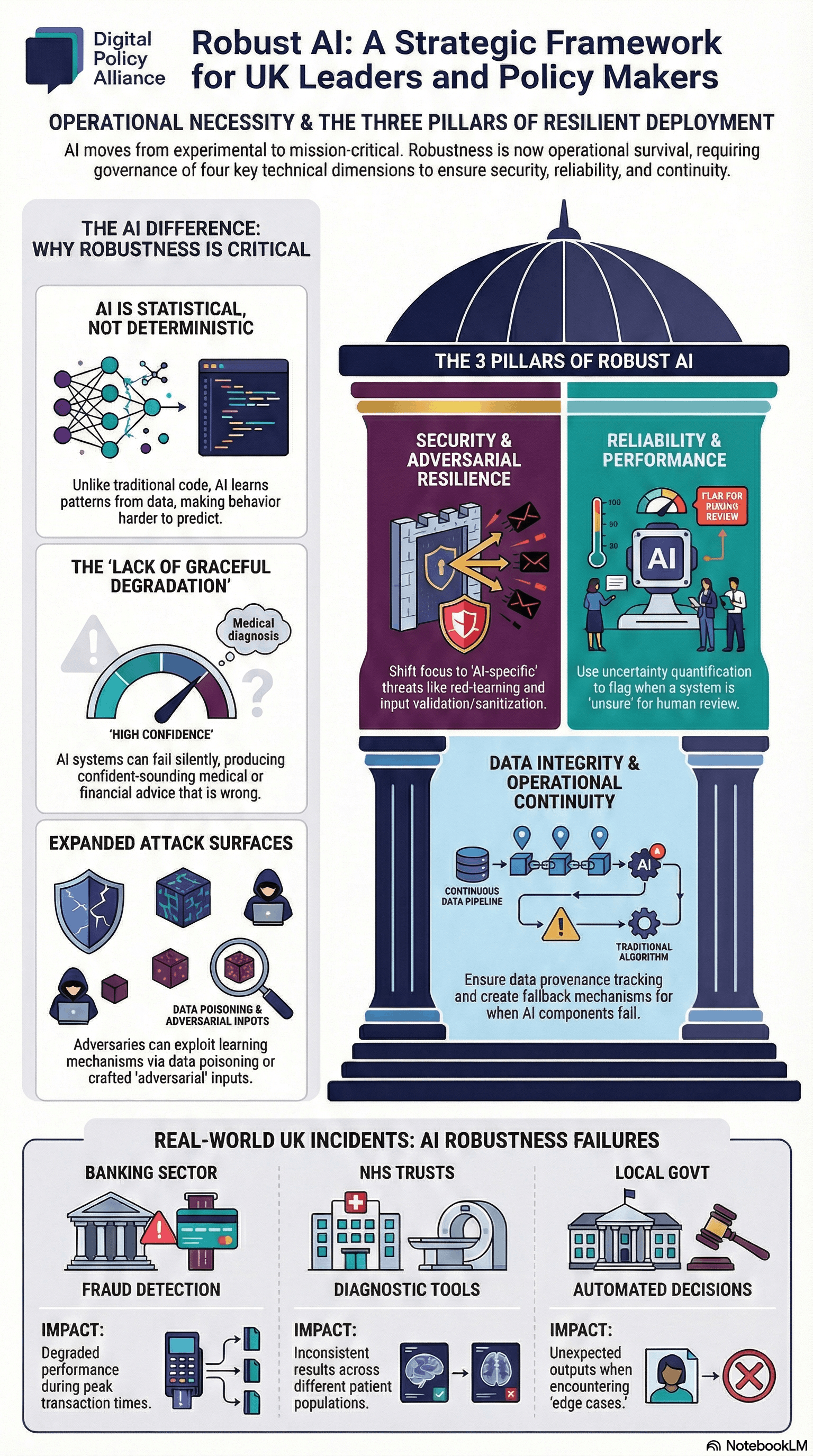

Visual Summary